How to model sequence learning problems?

In the previous article, we looked at the basics and properties of sequence learning problems, along with some examples from NLP, video, and speech data. In this article, let’s dive into the modeling side of sequence-based problems.

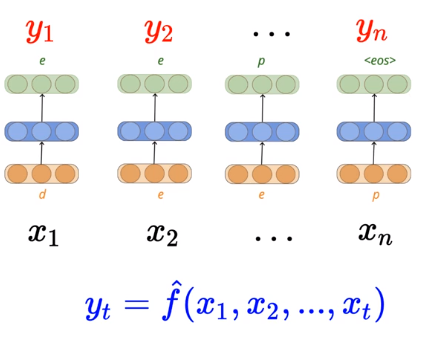

Let’s consider the task of character prediction, in which an output is predicted at every time step.

The figure above shows a basic model that takes a sequence of inputs and predicts an output at each time step, such that the output at any given time step depends on the current input as well as all previous inputs.

Let’s call the output at time step 1 “y₁”, the output at time step 2 “y₂”, and so on. In general, the output at the t-th time step is written as “yₜ”.

The true relationship is of the form “yₜ depends on the current input and on all the previous inputs”.

In practice, yₜ may not depend on every previous input (or may be only weakly influenced by some of them), but it depends on at least some of the previous inputs in addition to the input at the current time step.
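One way to write this dependence down compactly is shown below. The symbols xᵢ (the input at time step i) and f (the unknown true relationship) are notation assumed here for illustration; the article itself only names the outputs yₜ.

$$
y_t = f(x_1, x_2, \dots, x_{t-1}, x_t)
$$

Read this as: the output at step t is some function of the current input and of every input that came before it, even if a few of those earlier inputs end up contributing very little.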

The function/model needs to approximate the relationship between the inputs and the output, producing a prediction ŷₜ (“y_hat”) at each time step, and it must ensure that this prediction somehow depends on the previous inputs as well as the current one.
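To make the requirement concrete, here is a minimal Python sketch, not a real sequence model. The names `predict_at_every_step` and `toy_model`, and the rule inside `toy_model` (current input plus half the sum of earlier inputs), are hypothetical choices made purely for illustration. The only point is that each prediction is computed from the current input together with everything before it.

```python
from typing import Callable, List, Sequence


def predict_at_every_step(
    inputs: Sequence[float],
    model: Callable[[Sequence[float]], float],
) -> List[float]:
    """Return one prediction per time step.

    At step t the model only sees inputs[0..t], i.e. the current input
    and all previous inputs, never the future ones.
    """
    return [model(inputs[: t + 1]) for t in range(len(inputs))]


def toy_model(prefix: Sequence[float]) -> float:
    """Hypothetical stand-in for the learned function (an assumption).

    The current input counts fully; earlier inputs count half as much.
    """
    current = prefix[-1]
    previous = prefix[:-1]
    return current + 0.5 * sum(previous)


if __name__ == "__main__":
    base = predict_at_every_step([1.0, 2.0, 3.0], toy_model)
    # Changing the first input changes every prediction from step 1 onward.
    changed_first = predict_at_every_step([10.0, 2.0, 3.0], toy_model)
    # Changing the last input changes only the last prediction.
    changed_last = predict_at_every_step([1.0, 2.0, 30.0], toy_model)
    print(base)
    print(changed_first)
    print(changed_last)
```

Changing an early input shifts every later prediction, while changing the last input affects only the last prediction, which is exactly the dependence described above. A real model would learn this function from data rather than use a hand-written rule.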